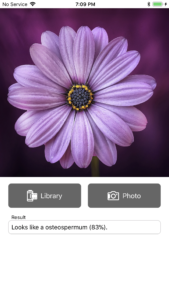

I just got done doing some benchmarking using the Oxford102 model to identify types of flowers on an iPhone 7 Plus from work. Oxford102 is a moderately large model, weighing in at around 229MB. As soon as the lone view in the app is instantiated, I load an instance of the model into memory, which seems to allocate about 50MB.

The very first time the model is queried after a cold app launch, latency is high. Across several runs I saw an average of around 900ms for the synchronous call to the model to return. On subsequent uses, however, latency drops dramatically, to an average response time of around 35ms. That’s good enough to provide near-real-time analysis of video, even when you factor in the overhead of scaling the source image to the appropriate input size for the model (in this case, 227×227). Even if you were only updating the results every 3-4 frames, it would still feel nearly instantaneous to the user.
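For anyone who wants to reproduce this kind of measurement, here is a minimal sketch of how the cold call and the warm calls could be timed separately. It is not the exact harness I used; `elapsedMilliseconds` and `benchmark` are names made up for illustration, and the closure you pass in would wrap whatever synchronous prediction call your generated model class exposes.

```swift
import Foundation

/// Runs `work` once and returns the elapsed time in milliseconds,
/// measured with the monotonic clock behind DispatchTime.
func elapsedMilliseconds(_ work: () -> Void) -> Double {
    let start = DispatchTime.now().uptimeNanoseconds
    work()
    let end = DispatchTime.now().uptimeNanoseconds
    return Double(end - start) / 1_000_000
}

/// Times the first call on its own, then averages `warmRuns` further calls,
/// so the slow first run does not skew the steady-state number.
func benchmark(warmRuns: Int, _ work: () -> Void) -> (first: Double, warmAverage: Double) {
    precondition(warmRuns > 0, "need at least one warm run")
    let first = elapsedMilliseconds(work)
    var total = 0.0
    for _ in 0..<warmRuns {
        total += elapsedMilliseconds(work)
    }
    return (first, total / Double(warmRuns))
}

// Usage (hypothetical): wrap the synchronous model call in the closure.
// let result = benchmark(warmRuns: 20) { runPrediction() }
// print("first: \(result.first) ms, warm average: \(result.warmAverage) ms")
```

The first-call number is only meaningful right after a cold launch, so the app has to be killed and relaunched between runs.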

From a practical standpoint, it would probably be a good idea to exercise the model once in the background before using it in a user-noticeable way. This will prevent the slow “first run” from being noticed.
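One way to do that warm-up is sketched below. To keep the example self-contained it uses a hypothetical `FlowerClassifier` protocol as a stand-in for the generated model class, and a flat `[Float]` array in place of the real image input; `WarmedClassifier` is likewise an invented name. The idea is just to fire one throwaway prediction on a background queue as soon as the model is loaded, and have real calls wait for it to finish.

```swift
import Foundation

// Hypothetical stand-in for the generated model class. A real Core ML
// model would take an image buffer rather than a flat array of floats.
protocol FlowerClassifier {
    func classify(_ pixels: [Float]) -> String
}

final class WarmedClassifier {
    private let model: FlowerClassifier
    private let queue = DispatchQueue(label: "classifier.warmup", qos: .utility)
    private let warmUpDone = DispatchGroup()

    init(model: FlowerClassifier, inputSide: Int = 227) {
        self.model = model
        // Throwaway input matching the model's 227x227 RGB input size.
        let dummy = [Float](repeating: 0, count: inputSide * inputSide * 3)
        // Pay the slow first-run cost off the calling thread.
        queue.async(group: warmUpDone) {
            _ = model.classify(dummy)
        }
    }

    /// Blocks until the warm-up prediction has finished, then classifies.
    func classify(_ pixels: [Float]) -> String {
        warmUpDone.wait()
        return model.classify(pixels)
    }
}
```

Because `classify` blocks while the warm-up is still running, it should be called from a background queue rather than the main thread if there is any chance the user gets there first.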